# torch2onnx SequenceConstruct

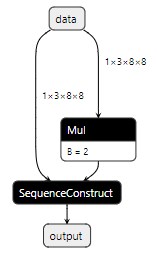

`SequenceConstruct`: constructs a tensor sequence containing the `inputs` tensors. All tensors in `inputs` must have the same data type.
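The following minimal, dependency-free Python sketch makes those semantics concrete. It is not the ONNX, PyTorch, or TVM implementation. The function name `sequence_construct` is hypothetical. One-dimensional `array.array` buffers stand in for tensors, and their `typecode` plays the role of the element data type.

```python
from array import array
from typing import List


def sequence_construct(*inputs: array) -> List[array]:
    """Hypothetical sketch of SequenceConstruct semantics.

    Tensors are stood in for by 1-D array.array buffers; the typecode
    acts as the element data type (an assumption for illustration only).
    """
    if not inputs:
        # This sketch rejects an empty call: there is nothing to build from.
        raise ValueError("SequenceConstruct needs at least one input tensor")
    dtype = inputs[0].typecode
    for i, t in enumerate(inputs):
        # All inputs must share one data type.
        if t.typecode != dtype:
            raise TypeError(f"input {i} has dtype {t.typecode!r}, expected {dtype!r}")
    # The result is an ordered sequence holding the inputs in order.
    return list(inputs)


x = array("f", [1.0, 2.0, 3.0])
x2 = array("f", [v * 2 for v in x])  # mirrors `x*2` in the model below
seq = sequence_construct(x, x2)
print(len(seq), list(seq[1]))  # 2 [2.0, 4.0, 6.0]
```

This sketch checks only the element type and preserves input order, matching the `[x, x*2]` list returned by `PrimModule` below. Mixing `"f"` and `"d"` buffers raises `TypeError`.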

```python
%cd ../../..
import set_env
from d2py.utils.file import mkdir

root_dir = ".temp"
mkdir(root_dir)
```

```
/media/pc/data/lxw/ai/tvm-book/doc/tutorials/frontend
```

```python
import torch
from torch.onnx import OperatorExportTypes, utils

class PrimModule(torch.jit.ScriptModule):
    @torch.jit.script_method
    def forward(self, x):
        # The returned list is exported as onnx::SequenceConstruct (see the graph below)
        return [x, x * 2]

model = PrimModule()
model.eval()

shape = 1, 3, 8, 8
x = torch.rand(*shape, dtype=torch.float32, requires_grad=False)
```

```python
# Export the model
output_name = "SequenceConstruct"
utils.export(
    model,                             # the PyTorch model
    x,                                 # model input (use a tuple for multiple inputs)
    f"{root_dir}/{output_name}.onnx",  # where to save the model (a file path or file-like object)
    export_params=True,                # store the trained parameter weights inside the model file
    opset_version=17,                  # ONNX opset version to export
    do_constant_folding=True,          # whether to apply constant folding for optimization
    input_names=["data"],              # model input names
    output_names=["output"],           # model output names
    keep_initializers_as_inputs=True,
    # export_modules_as_functions=True,
    verbose=True,
    operator_export_type=OperatorExportTypes.ONNX_FALLTHROUGH,
    # dynamic_axes={"data": {0: "batch_size"},    # variable-length axes
    #               "output": {0: "batch_size"}},
)
```

```
Exported graph: graph(%data : Float(1, 3, 8, 8, strides=[192, 64, 8, 1], requires_grad=0, device=cpu)):
  %/Constant_output_0 : Float(requires_grad=0, device=cpu) = onnx::Constant[value={2}, onnx_name="/Constant"](), scope: PrimModule:: # /tmp/ipykernel_2083243/2703021558.py:6:19
  %/Mul_output_0 : Float(1, 3, 8, 8, strides=[192, 64, 8, 1], device=cpu) = onnx::Mul[onnx_name="/Mul"](%data, %/Constant_output_0), scope: PrimModule:: # /tmp/ipykernel_2083243/2703021558.py:6:19
  %output : Float(1, 3, 8, 8, strides=[192, 64, 8, 1], device=cpu)[] = onnx::SequenceConstruct[onnx_name="/SequenceConstruct"](%data, %/Mul_output_0), scope: PrimModule::
  return (%output)
```

```python
import onnx
import tvm
from tvm import relay

onnx_model = onnx.load(f"{root_dir}/{output_name}.onnx")
mod, params = relay.frontend.from_onnx(onnx_model, {"data": shape}, freeze_params=True)
with tvm.transform.PassContext(opt_level=3):
    mod = relay.quantize.prerequisite_optimize(mod, params)
mod.show()
```

```
def @main(%data: Tensor[(1, 3, 8, 8), float32] /* ty=Tensor[(1, 3, 8, 8), float32] span=/Mul.data:0:0 */) -> (Tensor[(1, 3, 8, 8), float32], Tensor[(1, 3, 8, 8), float32]) {
  %0 = multiply(%data, 2f /* ty=float32 span=/Constant:0:0 */) /* ty=Tensor[(1, 3, 8, 8), float32] span=/Mul:0:0 */;
  (%data, %0) /* ty=(Tensor[(1, 3, 8, 8), float32], Tensor[(1, 3, 8, 8), float32]) span=/SequenceConstruct:0:0 */
}
```

In the resulting Relay module, the two-element ONNX sequence appears as the tuple `(%data, %0)`.