SiLU function

For inputs in the domain \(\mathbb{R}\), the SiLU (Sigmoid Linear Unit) function is defined as follows:

\[
\operatorname{SiLU}(x) = \frac{x}{1 + \exp(-x)} = x \cdot \operatorname{sigmoid}(x).
\]

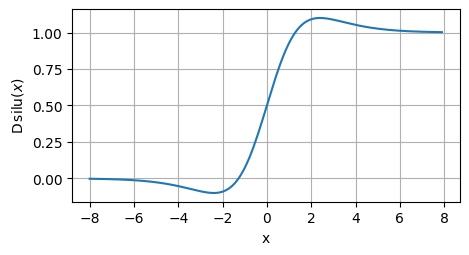
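The two forms in the definition are algebraically identical, since \(\operatorname{sigmoid}(x) = 1/(1 + \exp(-x))\). A minimal pure-Python sketch can check this numerically. The helpers `sigmoid` and `silu` below are hypothetical names chosen for illustration. PyTorch itself provides the function as `torch.nn.functional.silu`.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic sigmoid: 1 / (1 + exp(-x))."""
    return 1.0 / (1.0 + math.exp(-x))


def silu(x: float) -> float:
    """SiLU in its first form: x / (1 + exp(-x))."""
    return x / (1.0 + math.exp(-x))


# Both forms of the definition agree on a few sample points.
for x in [-8.0, -1.0, 0.0, 0.5, 3.0]:
    assert math.isclose(silu(x), x * sigmoid(x), rel_tol=1e-12, abs_tol=1e-15)

print(silu(0.0))  # 0.0
print(silu(1.0))  # ≈ 0.7311, i.e. sigmoid(1)
```

`math.exp(-x)` raises `OverflowError` once \(-x\) exceeds roughly 709. This sketch therefore assumes moderate inputs.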

```python
import torch
from torch_book.plotx.utils import plot

# Plot SiLU over [-8, 1) to focus on its behaviour for negative inputs
x = torch.arange(-8.0, 1.0, 0.1, requires_grad=True)
y = torch.nn.functional.silu(x)
plot(x.detach(), y.detach(), 'x', 'silu(x)', figsize=(5, 2.5))
```

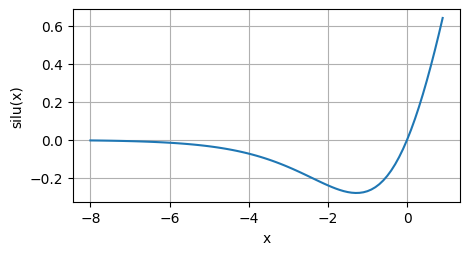
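The plot over \([-8, 1]\) shows that SiLU is not monotonic. It dips below zero for negative inputs and then rises back toward 0. The following minimal pure-Python sketch locates that minimum numerically, assuming a plain grid scan is accurate enough. The `silu` helper is hypothetical and is not a `torch_book` function.

```python
import math


def silu(x: float) -> float:
    # x * sigmoid(x), written as x / (1 + exp(-x))
    return x / (1.0 + math.exp(-x))


# Scan the same interval as the plot, [-8, 1), on a fine uniform grid.
step = 1e-4
n = int(round(9.0 / step))
xs = [-8.0 + i * step for i in range(n)]

# The grid point with the smallest SiLU value approximates the minimiser.
x_min = min(xs, key=silu)
print(f"minimum near x = {x_min:.4f}, SiLU = {silu(x_min):.4f}")
```

The scan should report a minimum near \(x \approx -1.278\), with \(\operatorname{SiLU}(x) \approx -0.278\). A grid scan only resolves the location to about one step. A root-finder applied to the derivative would give a more precise result.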

```python
# Plot SiLU over the symmetric range [-8, 8)
x = torch.arange(-8.0, 8.0, 0.1, requires_grad=True)
y = torch.nn.functional.silu(x)
plot(x.detach(), y.detach(), 'x', 'silu(x)', figsize=(5, 2.5))
```

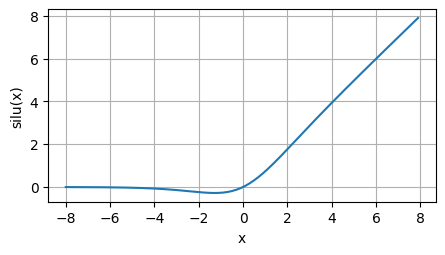

Visualize the derivative:

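```python
# Backpropagate a vector of ones. Each y[i] depends only on x[i], so
# x.grad holds the elementwise derivative dSiLU/dx at every point
y.backward(torch.ones_like(x), retain_graph=True)
plot(x.detach(), x.grad, 'x', r'$\operatorname{D} \operatorname{silu}(x)$', figsize=(5, 2.5))
```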
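Applying the product rule together with the identity \(\operatorname{sigmoid}'(x) = \operatorname{sigmoid}(x)\bigl(1 - \operatorname{sigmoid}(x)\bigr)\) gives a closed form for the plotted derivative:

\[
\frac{\mathrm{d}}{\mathrm{d}x}\operatorname{SiLU}(x) = \operatorname{sigmoid}(x) + x\,\operatorname{sigmoid}(x)\bigl(1 - \operatorname{sigmoid}(x)\bigr) = \operatorname{sigmoid}(x)\bigl(1 + x\,(1 - \operatorname{sigmoid}(x))\bigr).
\]

The minimal sketch below checks that closed form against a central finite difference, assuming a step of \(h = 10^{-5}\) gives an adequate reference. `silu_grad` and `central_diff` are hypothetical helper names and are not part of `torch_book` or PyTorch.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def silu(x: float) -> float:
    return x * sigmoid(x)


def silu_grad(x: float) -> float:
    # Closed form: sigmoid(x) * (1 + x * (1 - sigmoid(x)))
    s = sigmoid(x)
    return s * (1.0 + x * (1.0 - s))


def central_diff(f, x: float, h: float = 1e-5) -> float:
    # Symmetric finite-difference approximation of f'(x)
    return (f(x + h) - f(x - h)) / (2.0 * h)


# The closed form matches the numerical derivative across the plotted range.
for x in [-8.0, -1.278, 0.0, 2.0, 8.0]:
    assert abs(silu_grad(x) - central_diff(silu, x)) < 1e-6

print(silu_grad(0.0))  # 0.5, since sigmoid(0) = 0.5
```

The closed form also explains the shape of the plot. The derivative equals 0.5 at \(x = 0\) and is negative to the left of the minimum near \(x \approx -1.278\). For moderately positive inputs it slightly exceeds 1, reaching about 1.0998 near \(x = 2.4\). It then approaches 1 as \(x \to \infty\).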