Theory

Given a matrix $\mathbf{A} = [\mathbf{a}_1, \mathbf{a}_2, \cdots, \mathbf{a}_m]^T \in \mathbb{R}^{m \times n}$ and a vector $\mathbf{x} = [x_1, x_2, \cdots, x_n]^T \in \mathbb{R}^{n}$, we have:

We also have:

Therefore,

This gives:

That is:
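One derivation that fits this chain of steps is sketched below. It is an assumption about the intended content rather than the author's own equations, and it uses the denominator-layout convention (the derivative of an $m$-vector with respect to an $n$-vector is an $n \times m$ matrix), which is also an assumption.

$$
\mathbf{A}\mathbf{x} = [\mathbf{a}_1^T\mathbf{x}, \mathbf{a}_2^T\mathbf{x}, \cdots, \mathbf{a}_m^T\mathbf{x}]^T
$$

$$
\frac{\partial\, \mathbf{a}_i^T\mathbf{x}}{\partial \mathbf{x}} = \mathbf{a}_i, \qquad i = 1, 2, \cdots, m
$$

$$
\frac{\partial\, \mathbf{A}\mathbf{x}}{\partial \mathbf{x}} = [\mathbf{a}_1, \mathbf{a}_2, \cdots, \mathbf{a}_m] = \mathbf{A}^T
$$

$$
\frac{\partial\, \mathbf{x}^T\mathbf{A}\mathbf{x}}{\partial \mathbf{x}} = (\mathbf{A} + \mathbf{A}^T)\mathbf{x} \quad (m = n), \qquad \frac{\partial\, \mathbf{x}^T\mathbf{x}}{\partial \mathbf{x}} = 2\mathbf{x}
$$

The last identity is the case $\mathbf{A} = \mathbf{I}$, and it implies that $y = 2\mathbf{x}^T\mathbf{x}$ has gradient $4\mathbf{x}$.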

Consider the following example:

An example

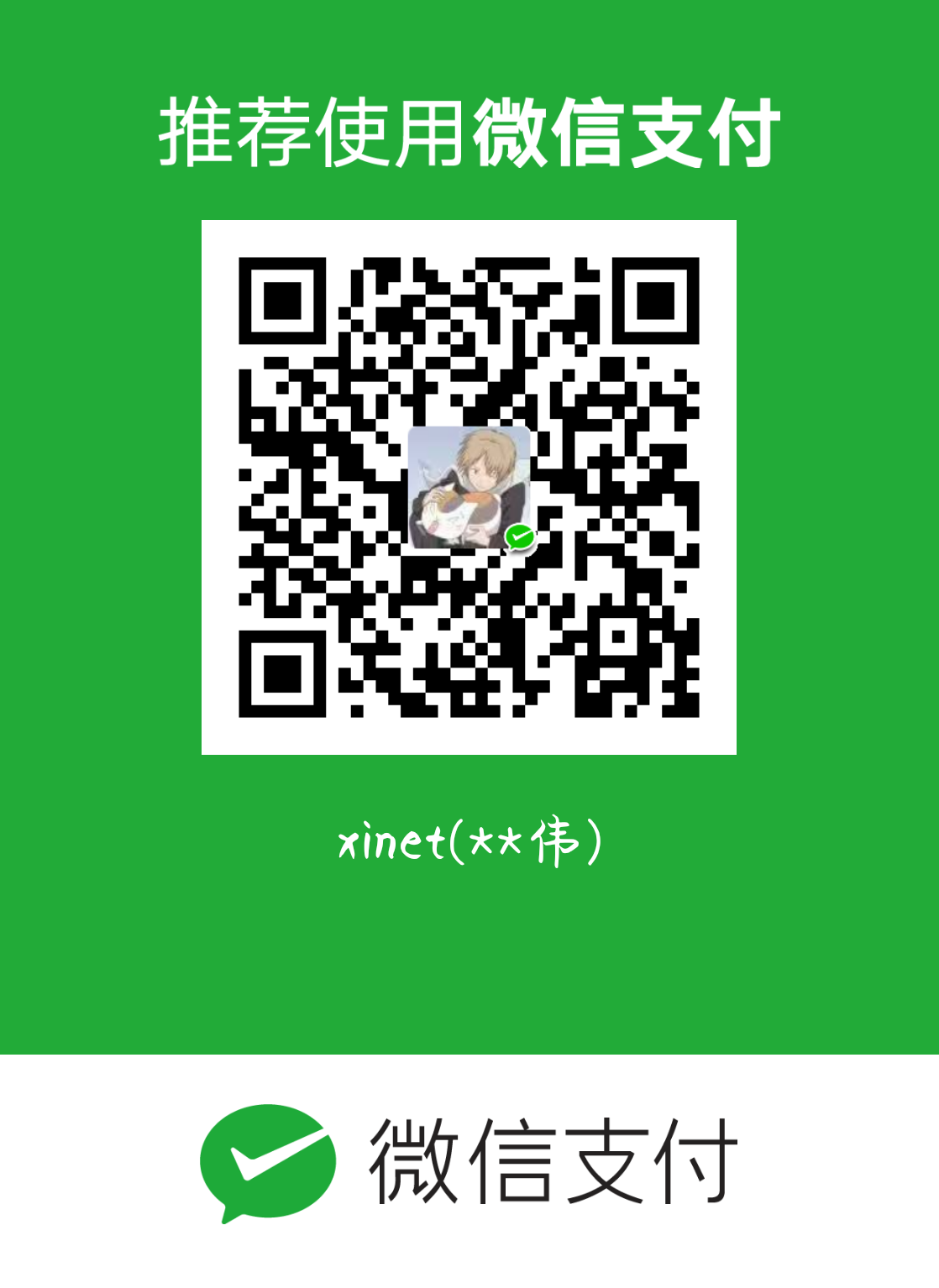
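As a framework-independent sanity check, the sketch below compares a central-difference estimate with the closed-form gradient $4\mathbf{x}$ of $y = 2\mathbf{x}^{\top}\mathbf{x}$ at $\mathbf{x} = [0, 1, 2, 3]^{\top}$. The helper names `f` and `numerical_gradient` and the step size `eps` are illustrative choices, not part of any library.

```python
def f(x):
    """Return y = 2 * x^T x for a list of floats x."""
    return 2.0 * sum(v * v for v in x)


def numerical_gradient(func, x, eps=1e-6):
    """Central-difference estimate of the gradient of func at x."""
    grad = []
    for i in range(len(x)):
        forward = list(x)
        backward = list(x)
        forward[i] += eps
        backward[i] -= eps
        grad.append((func(forward) - func(backward)) / (2.0 * eps))
    return grad


x = [0.0, 1.0, 2.0, 3.0]
approx = numerical_gradient(f, x)
exact = [4.0 * v for v in x]  # closed-form gradient of 2 * x^T x
max_error = max(abs(a - e) for a, e in zip(approx, exact))
print("numerical:", approx)
print("exact:    ", exact)
```

The automatic-differentiation results from each framework should agree with `exact` up to floating-point precision.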

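MXNet:

```python
from xint import utils
from xint import mxnet as xint

np = xint.np
```

TensorFlow:

```python
import tensorflow as tf  # needed by the later tf.Variable / tf.GradientTape calls

from xint import utils
from xint import tensorflow as xint

np = xint.np
```

PyTorch:

```python
from xint import utils
from xint import torch as xint

np = xint.np
```

Create the tensor $\mathbf{x}$: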

```python
x = np.arange(4.0).reshape(4, 1)
```

Compute the gradient of the function $y = 2\mathbf{x}^{\top}\mathbf{x}$:

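MXNet:

```python
# Allocate memory for the gradient of a tensor by calling `attach_grad`
x.attach_grad()
# After the gradient with respect to `x` is computed, it is available through
# the `grad` attribute, whose values are initialized to 0
x.grad
```

TensorFlow:

```python
x = tf.Variable(x)
```

PyTorch:

```python
x.requires_grad_(True)  # same effect as passing `requires_grad=True` when the tensor is created
x.grad  # the default value is None
```

Now let us compute $y$: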

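MXNet:

```python
from mxnet import autograd

# Place the code inside `autograd.record` to build the computational graph
with autograd.record():
    y = 2 * x.T @ x
float(y)
```

TensorFlow:

```python
# Record all computations on a tape
with tf.GradientTape() as t:
    y = 2 * tf.transpose(x) @ x
float(y)
```

PyTorch:

```python
y = 2 * x.T @ x
float(y)
```

Next, we can compute the gradient of $y$ with respect to each component of $\mathbf{x}$ automatically by calling the backpropagation function, and then print these gradients: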

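MXNet:

```python
y.backward()
x.grad
```

TensorFlow:

```python
# Record all computations on a tape
with tf.GradientTape() as t:
    y = 2 * tf.transpose(x) @ x
t.gradient(y, x)  # gradient of y with respect to x
```

PyTorch:

```python
y.backward()
x.grad
```

We can now compute another function of $\mathbf{x}$: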

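MXNet:

```python
with autograd.record():
    y = x.sum()
y.backward()
x.grad  # overwritten by the newly computed gradient
```

TensorFlow:

```python
with tf.GradientTape() as t:
    y = tf.reduce_sum(x)
t.gradient(y, x)  # overwritten by the newly computed gradient
```

PyTorch:

```python
# By default PyTorch accumulates gradients, so the previous values must be cleared
x.grad.zero_()
y = x.sum()
y.backward()
x.grad
```

Note: when backpropagating from a non-scalar variable, MXNet and TensorFlow return a gradient when the corresponding function is called directly (the elements are implicitly summed). PyTorch does not allow `backward` to be called on a non-scalar without a `gradient` argument, so the usual approach is to sum the output first and then compute the gradient. For example: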

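MXNet:

```python
# When `backward` is called on a vector-valued variable `y` (a function of `x`),
# a new scalar variable is created by summing the elements of `y`, and the
# gradient of that scalar with respect to `x` is computed
with autograd.record():
    y = x * x  # `y` is a vector
y.backward()
x.grad  # equivalent to y = sum(x * x)
```

TensorFlow:

```python
with tf.GradientTape() as t:
    y = x * x
t.gradient(y, x)  # equivalent to `y = tf.reduce_sum(x * x)`
```

PyTorch:

```python
# Calling `backward` on a non-scalar requires a `gradient` argument, which
# specifies the gradient of the differentiated function with respect to `self`.
# Here we only want the sum of the partial derivatives, so passing a gradient
# of ones is appropriate
x.grad.zero_()
y = x * x
# Equivalent to y.backward(torch.ones_like(y))
y.sum().backward()
x.grad
```

Detaching computation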

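Sometimes we want to move some computation outside the recorded computational graph. For example, suppose $\mathbf{y}$ is computed as a function of $\mathbf{x}$, and $\mathbf{z}$ is then computed as a function of both $\mathbf{y}$ and $\mathbf{x}$. Now suppose we want the gradient of $\mathbf{z}$ with respect to $\mathbf{x}$, but for some reason we want to treat $\mathbf{y}$ as a constant and only account for the role that $\mathbf{x}$ plays after $\mathbf{y}$ has been computed.

Here we can detach $\mathbf{y}$ to obtain a new variable $u$ that has the same value as $\mathbf{y}$ but discards any information in the computational graph about how $\mathbf{y}$ was computed. In other words, the gradient does not flow backward through $u$ to $\mathbf{x}$. The backpropagation below therefore computes the partial derivative of $\mathbf{z} = u * \mathbf{x}$ with respect to $\mathbf{x}$ while treating $u$ as a constant, rather than the partial derivative of $\mathbf{z} = \mathbf{x} * \mathbf{x} * \mathbf{x}$ with respect to $\mathbf{x}$.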
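The effect of detaching can be reproduced without any framework. The sketch below is a toy scalar reverse-mode differentiator. The `Var` class and its methods are hypothetical helpers written for this illustration and do not mirror the internals of MXNet, TensorFlow, or PyTorch.

```python
class Var:
    """Scalar value that remembers how it was computed (hypothetical helper)."""

    def __init__(self, value, parents=()):
        self.value = value
        self.grad = 0.0
        # Each entry is a pair (parent_var, local_derivative).
        self.parents = parents

    def __mul__(self, other):
        # d(a*b)/da = b and d(a*b)/db = a
        return Var(self.value * other.value,
                   parents=((self, other.value), (other, self.value)))

    def detach(self):
        # Same value, but no parents: the graph history is cut here.
        return Var(self.value)

    def backward(self, upstream=1.0):
        # Accumulate the incoming gradient, then pass it on along every path.
        self.grad += upstream
        for parent, local in self.parents:
            parent.backward(upstream * local)


# Without detaching: z = x * x * x, so dz/dx = 3 * x^2.
x = Var(3.0)
y = x * x
z = y * x
z.backward()
grad_full = x.grad

# With detaching: u is a constant, so dz/dx = u.
x = Var(3.0)
y = x * x
u = y.detach()
z = u * x
z.backward()
grad_detached = x.grad

print(grad_full, grad_detached, u.value)
```

In this toy version `grad_detached` equals `u.value`, which is the same relation that the `x.grad == u` checks in the framework code test elementwise.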

MXNet:

```python
with autograd.record():
    y = x * x
    u = y.detach()
    z = u * x
z.backward()
x.grad == u
```

TensorFlow:

```python
# Set `persistent=True` to run `t.gradient` more than once
with tf.GradientTape(persistent=True) as t:
    y = x * x
    u = tf.stop_gradient(y)
    z = u * x
x_grad = t.gradient(z, x)
x_grad == u
```

PyTorch:

```python
x.grad.zero_()
y = x * x
u = y.detach()
z = u * x
z.sum().backward()
x.grad == u
```

General differentiation function

Let $f: \mathbb{R}^n \rightarrow \mathbb{R}$ and $\mathbf{x} = [x_1, x_2, \ldots, x_n]^\top$. Then

If $\mathbf{y} = [y_1, y_2, \ldots, y_m]^\top$ and $x \in \mathbb{R}$, then:

In addition,
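The standard definitions that fit these three statements are given below. They are an assumption about what is intended here, and they use the denominator-layout convention, which is likewise an assumption.

$$
\frac{\partial f}{\partial \mathbf{x}} = \left[\frac{\partial f}{\partial x_1}, \frac{\partial f}{\partial x_2}, \ldots, \frac{\partial f}{\partial x_n}\right]^\top \in \mathbb{R}^{n}
$$

$$
\frac{\partial \mathbf{y}}{\partial x} = \left[\frac{\partial y_1}{\partial x}, \frac{\partial y_2}{\partial x}, \ldots, \frac{\partial y_m}{\partial x}\right] \in \mathbb{R}^{1 \times m}
$$

$$
\frac{\partial \mathbf{y}}{\partial \mathbf{x}} \in \mathbb{R}^{n \times m}, \qquad \left[\frac{\partial \mathbf{y}}{\partial \mathbf{x}}\right]_{ij} = \frac{\partial y_j}{\partial x_i}
$$

Under the numerator-layout convention each of these objects is transposed, so it is worth checking which layout a given text or library uses before comparing shapes.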